NeRFs vs Photogrammetry: Exploring the Future of 3D Imaging

Introduction to 3D Imaging Technologies

3D imaging technologies have transformed the way we capture and visualize the world around us, with applications ranging from virtual production to cultural heritage preservation. Two notable techniques, Neural Radiance Fields (NeRFs) and photogrammetry, have attracted attention for their ability to reconstruct detailed 3D scenes. This article examines both technologies, highlighting their principles, differences, and potential applications.

Understanding NeRFs

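Neural Radiance Fields, or NeRFs, are a recent deep-learning approach to 3D imaging that builds a continuous 3D representation of a scene from a collection of 2D images. Traditional methods reconstruct geometry by matching features across images and triangulating them. A NeRF works differently: it trains a neural network to map a 3D position and viewing direction to a color and a volume density. To render a pixel, the network is sampled at points along the camera ray through that pixel and the results are composited, which allows photorealistic images of the scene to be rendered from new viewpoints. NeRFs still need to know where each input photo was taken. These camera poses are usually estimated beforehand with structure-from-motion tools such as COLMAP, which themselves rely on feature matching. The technology holds promise for applications that demand high detail and realism, such as film production and virtual reality.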

Neural Radiance Field of a radiator fixed to a wall
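The rendering step described above can be sketched in a few lines of NumPy. This is a minimal illustration of the volume-rendering step used in the original NeRF formulation, not a full NeRF. No learning happens here: the trained network is replaced by a hypothetical `toy_field` function describing a solid red sphere. The names `composite_ray` and `toy_field`, the sample count, and the density value are all illustrative assumptions.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Alpha-composite samples along one camera ray (NeRF-style volume rendering).

    sigmas: (N,) non-negative densities at the samples
    colors: (N, 3) RGB colors in [0, 1] at the samples
    t_vals: (N,) increasing distances of the samples along the ray
    Returns the rendered RGB color and the per-sample weights.
    """
    # Distance between neighboring samples; the last interval is treated as
    # effectively infinite so that anything the ray reaches there is absorbed.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity contributed by each interval.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: probability the ray reaches sample i without being absorbed.
    optical_depth = np.cumsum(sigmas * deltas)
    trans = np.exp(-np.concatenate(([0.0], optical_depth[:-1])))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights

def toy_field(points):
    """Hypothetical stand-in for the trained NeRF network: a solid red sphere of
    radius 1 at the origin. A real NeRF would also take the viewing direction."""
    inside = np.linalg.norm(points, axis=-1) < 1.0
    sigmas = np.where(inside, 10.0, 0.0)
    colors = np.tile([1.0, 0.0, 0.0], (len(points), 1))
    return sigmas, colors

# One ray starting 3 units in front of the sphere and pointing at its center.
origin = np.array([0.0, 0.0, -3.0])
direction = np.array([0.0, 0.0, 1.0])
t_vals = np.linspace(0.0, 6.0, 128)
points = origin + t_vals[:, None] * direction
sigmas, colors = toy_field(points)
rgb, weights = composite_ray(sigmas, colors, t_vals)
```

The sphere is effectively opaque, so almost all of the ray's weight falls on the first few samples inside it and the rendered color is essentially pure red. In a real NeRF, `toy_field` would be a trained network queried with both position and viewing direction. The same compositing would then run for every pixel of the output image.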

The Role of Photogrammetry

Photogrammetry, a well-established 3D imaging technique, constructs 3D models by analyzing multiple 2D photographs of a subject taken from different angles. It works in three steps: it identifies the same points across overlapping images, estimates where each photo was taken, and triangulates those points. In this way, photogrammetry can accurately map the 3D structure of objects and environments. The method is valued for its precision and versatility, and is widely used in surveying, archaeology, and historical preservation.

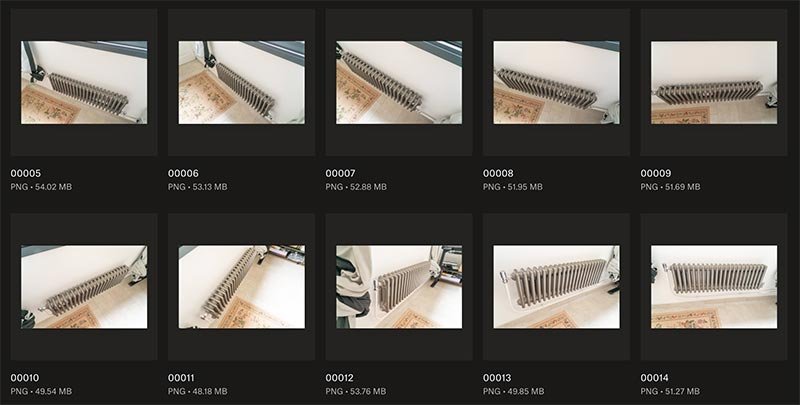

Images from the photogrammetry dataset of the radiator
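The triangulation step can be illustrated with the standard linear (DLT) method for two views. This sketch assumes the cameras' projection matrices are already known. In a real photogrammetry pipeline they are estimated from the matched points themselves and refined with bundle adjustment, which is omitted here. The function names `triangulate_dlt` and `project`, the camera intrinsics, and the example point are hypothetical.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point seen in two images.

    P1, P2: (3, 4) camera projection matrices (assumed known here).
    x1, x2: (2,) pixel coordinates of the same matched point in each image.
    Returns the estimated 3D point in world coordinates.
    """
    # Each view gives two linear equations in the homogeneous point X:
    # u * (P[2] @ X) - P[0] @ X = 0 and v * (P[2] @ X) - P[1] @ X = 0.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 camera matrix and return pixel coordinates."""
    h = P @ np.append(X, 1.0)
    return h[:2] / h[2]

# Hypothetical intrinsics: focal length 800 px, principal point (320, 240).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
# Camera 1 at the origin; camera 2 moved one unit along x (P = K [R | t], t = -C).
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 5.0])
x1, x2 = project(P1, X_true), project(P2, X_true)  # the "matched" image points
X_est = triangulate_dlt(P1, P2, x1, x2)
```

With exact, noise-free correspondences, the point is recovered exactly. With real detections, the two rays no longer intersect and the SVD returns an algebraic least-squares estimate. This is why production pipelines follow triangulation with nonlinear refinement.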

NeRFs and Photogrammetry: A Comparative Analysis

While both NeRFs and photogrammetry offer compelling advantages for 3D reconstruction, they suit different needs and project requirements. Photogrammetry excels at producing detailed surface meshes with texture maps, making it ideal for applications that need tangible, accurate models. NeRFs, on the other hand, excel at rendering complex scenes from new viewpoints. This includes view-dependent effects such as reflections and specular highlights, which textured meshes struggle to reproduce. However, a NeRF stores the scene implicitly in a neural network rather than as a surface. Obtaining an exportable mesh therefore requires an extra extraction step, and the resulting meshes are often less clean than photogrammetric ones. The choice between NeRFs and photogrammetry ultimately depends on the demands of the project: the required level of detail and realism, and the end use of the 3D model.
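To see why a mesh is an extra step for NeRFs, consider how one is usually obtained. The learned density is sampled on a regular 3D grid, and a surface is extracted at a chosen density threshold, typically with marching cubes. The sketch below is a simplified, NumPy-only stand-in for that process. It uses a hypothetical `toy_density` function in place of a trained NeRF and returns boundary voxels of the thresholded grid rather than triangles. The function names, grid resolution, and threshold are assumptions.

```python
import numpy as np

def toy_density(points):
    """Hypothetical stand-in for a trained NeRF's density output: a soft-edged sphere."""
    r = np.linalg.norm(points, axis=-1)
    # Density is 50 at the center and falls linearly to 0 at radius 1.
    return 50.0 * np.clip(1.0 - r, 0.0, None)

def surface_voxels(density_fn, resolution=32, bound=1.5, threshold=10.0):
    """Sample density_fn on a regular grid and return the centers of boundary voxels.

    A voxel counts as occupied if its density exceeds `threshold`; a boundary voxel
    is an occupied voxel with at least one unoccupied face neighbor. Marching cubes
    would instead fit triangles through the same thresholded field.
    """
    coords = np.linspace(-bound, bound, resolution)
    grid = np.stack(np.meshgrid(coords, coords, coords, indexing="ij"), axis=-1)
    density = density_fn(grid.reshape(-1, 3)).reshape(grid.shape[:3])
    occupied = density > threshold
    # Pad with empty space so voxels on the grid edge have neighbors to compare with.
    padded = np.pad(occupied, 1, constant_values=False)
    interior = occupied.copy()
    for ax in range(3):
        for shift in (-1, 1):
            # Shifted copy of the padded grid, cropped back to the original size.
            neighbor = np.roll(padded, shift, axis=ax)[1:-1, 1:-1, 1:-1]
            interior &= neighbor
    boundary = occupied & ~interior
    return grid[boundary]

pts = surface_voxels(toy_density)
radii = np.linalg.norm(pts, axis=1)
```

For this toy field, raising `threshold` from 10 to 30 shrinks the extracted surface from a radius just under 0.8 to one just under 0.4. A real NeRF has no single "correct" threshold, and its density can be noisy where the input photos give little coverage. As a result, meshes extracted this way often need cleanup.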

The Future of 3D Imaging: NeRFs, Photogrammetry, and Beyond

As 3D imaging technologies continue to evolve, combining NeRFs and photogrammetry could further improve the detail and realism of 3D models. Future advances may see NeRFs improving the texture and lighting fidelity of photogrammetric models. In turn, photogrammetric techniques could make it easier to obtain clean meshes from NeRFs. The complementary strengths of these technologies could transform industries ranging from film production to cultural heritage, extending what virtual reconstructions can achieve.

Summary

NeRFs and photogrammetry represent two powerful but distinct approaches to 3D imaging. Photogrammetry produces precise, exportable models suited to a wide range of applications, while NeRFs deliver highly photorealistic renderings from new viewpoints. The ongoing development and potential integration of these technologies promise further advances in 3D imaging for virtual production, preservation, and beyond.

Frequently Asked Questions

A NeRF, or Neural Radiance Field, is a deep learning technique that creates continuous 3D representations from 2D images, offering photorealistic scene renderings.

NeRFs are unlikely to completely replace photogrammetry, given their current limitations in producing exportable meshes, but the two techniques may complement each other in future applications.

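The key difference lies in their approaches. NeRFs use deep learning to represent a scene as a continuous field of color and density, which is then rendered by volume rendering. Photogrammetry reconstructs explicit models by triangulating common points across multiple photographs. NeRFs do not triangulate matched features to build geometry, although the camera poses they require are usually estimated with feature-matching tools.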